New research can predict how plots, images, and music affect your emotions while watching a movie.

Every time I think AI can’t surprise me anymore, new research arrives to prove me wrong. Yesterday, scientists at the MIT Media Lab announced that they’ve taught a machine how to manipulate our emotions, a technology that they believe can help filmmakers create more engrossing movies and TV.

In a blog post published in collaboration with strategic consulting firm McKinsey & Company, the researchers said that they used a deep neural network to watch thousands of small slices of video: movies, TV, and short online features. For each slice, the neural network estimated which elements made a moment emotionally special, constructing an emotional arc in the process. To test the model’s accuracy, the team had human volunteers watch the same clips, tag their reactions, and label which elements (from the music to the dialogue to the type of imagery shown on screen) weighed most heavily in their emotional response. This information helped the researchers fine-tune the model until it became accurate at guessing what triggers human emotions.
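For readers who want a feel for what an “emotional arc” might look like as data, here is a minimal sketch in Python. It is not the researchers’ method: it assumes each video slice has already been given a single emotion score between -1 (negative) and 1 (positive), and it smooths those scores with a simple moving average. The function name `emotional_arc`, the `window` parameter, and the sample scores are all hypothetical.

```python
from statistics import mean


def emotional_arc(slice_scores, window=3):
    """Smooth per-slice emotion scores into an arc.

    Assumption: each score is one number per video slice, from -1
    (negative) to 1 (positive). A centered moving average stands in
    for whatever smoothing the real system uses.
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    half = window // 2
    arc = []
    for i in range(len(slice_scores)):
        # Clamp the averaging window at both ends of the clip.
        lo = max(0, i - half)
        hi = min(len(slice_scores), i + half + 1)
        arc.append(mean(slice_scores[lo:hi]))
    return arc


# Hypothetical scores for seven consecutive slices: a happy opening
# followed by a sharp emotional drop.
scores = [0.2, 0.6, 0.8, 0.7, 0.1, -0.6, -0.9]
arc = emotional_arc(scores)
```

Plotting `arc` against slice position would give the kind of rising and falling curve the article describes, with the moment-to-moment jitter averaged out.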

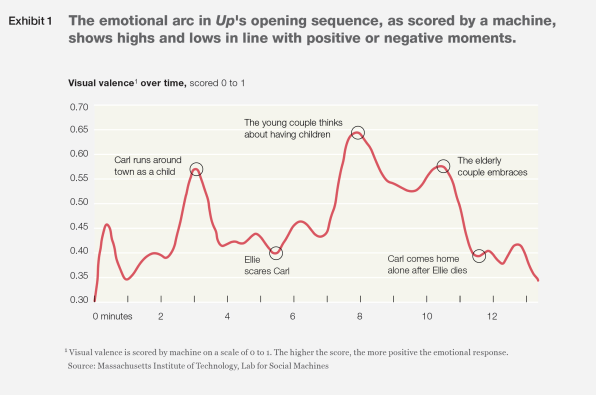

So what does a machine learning model see when it watches the opening act of Pixar’s Up? The first 12 minutes of the movie show how Carl and his wife Ellie meet as kids, play, grow up, and eventually marry. They are unable to have children but lovingly grow old together and, finally, we watch Ellie fall sick and die. At this point, everyone in the movie theater, without exception, is weeping.

According to the researchers, they didn’t develop this technology to create stories with AI. In fact, they describe the first movie written entirely by artificial intelligence (Sunspring, which opened in 2016) as awkward and basically a failure. Instead, they believe that right now it’s better to use AI “to enhance [the work of film writers] by providing insights that increase a story’s emotional pull—for instance, identifying a musical score or visual image that helps engender feelings of hope.”

They may be right. Grammy nominee Alex Da Kid took a very similar approach earlier this year when he created a hit record using IBM Watson’s cognitive abilities. IBM’s AI studied the last five years of hits on the Billboard charts and combined that with data from other media, from newspapers to social media. Talking to Forbes’s Bernard Marr, Da Kid said that Watson “showed [him] how emotionally volatile we as humans are and have been, particularly over the last five years.” By looking at these patterns, Da Kid was able to come up with themes that would resonate with the public. He then used Watson’s music generation algorithms to help compose his themes, getting suggestions on different musical elements that would connect better with his audience. The result was a success, with his AI-enhanced single reaching the top five of the iTunes Hot Tracks chart.

In MIT’s case, the researchers believe that this new technology could do exactly the same thing, helping storytellers “thrive in a world of seemingly infinite audience demand.”

But (and there’s always a big hairy “but” behind most corners) if you’re a budding scriptwriter wanting to ask advice of HAL-KUBRICK, there’s a plot twist. Talking over email, lead project researcher Eric Chu told me that “aside from putting the code and datasets online, there aren’t current plans to make a public version of the tool.” [Sorry. Sad song plays. Fade to black.]

I wouldn’t be surprised if a factory like Disney or maybe even Netflix is already playing with similar technologies. I don’t mind if all movies make us weep or laugh or perch on the edge of our seats, but if AI can be used to manipulate our emotional responses at the movie theater, it could also be used by governments and companies to do the same thing in every single piece of media we consume. I can’t wait for Charlie Brooker’s episode of Black Mirror about this, written without an AI’s help.

–

This article first appeared in www.fastcodesign.com
