The author and eminent design researcher Don Norman examines how poorly designed software spread panic in Hawaii–and offers tips for avoiding such incidents in the future.

“BALLISTIC MISSILE THREAT INBOUND TO HAWAII. SEEK IMMEDIATE SHELTER. THIS IS NOT A DRILL.” That was the false alert sent to people in Hawaii, January 13, 2018.

What happened? “An employee pushed the wrong button,” Hawaii Governor David Ige told CNN.

How do false alerts happen? As soon as I read about the false alert of a missile attack on Hawaii, I knew who would be blamed: some poor, innocent person. Human error would be the explanation. But it would be wrong. The real culprit is poor design: poor, bad, incompetent.

When some error occurs, it is commonplace to look for the reason. In serious cases, a committee is formed which more or less thoroughly tries to determine the cause. Eventually, it will be discovered that a person did something wrong. “Hah,” says the investigation committee. “Human error. Increase the training. Punish the guilty person.” Everyone feels good. The public is reassured. An innocent person is punished, and the real problem remains unfixed.

The correct response is for the committee to ask, “What caused the human error? How could that have been prevented?” Find the root cause and then cure that.

To me, the most frustrating aspect of these errors is that they result from poor design. Incompetent design. Worse, for decades we have known how proper, human-centered design can prevent them. I started studying and writing about these errors in the 1980s–over 30 years ago.

What really happened? Reports suggest that the system is tested twice a day with the person doing the test selecting the test message from a list. In this case, it is believed, the person accidentally selected the wrong message.

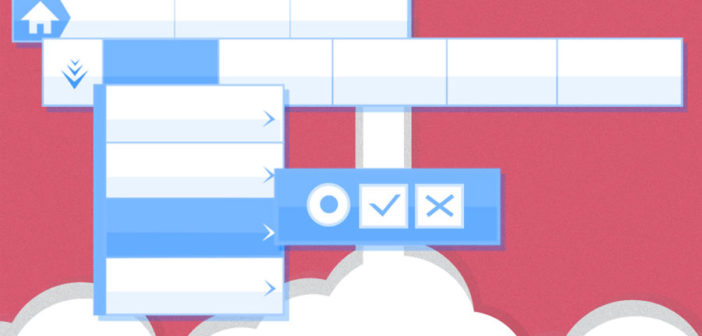

This is the screen that set off the ballistic missile alert on Saturday. The operator clicked the PACOM (CDW) State Only link. The drill link is the one that was supposed to be clicked. (Honolulu Civil Beat, @CivilBeat, January 16, 2018)

When choices are life-threatening, there are several easy options to avoid the problem. The main, elementary design rule is: never do a dangerous (or irreversible) action without requiring confirmation,* ideally by a second person who is separated from the person doing the action.

- The test message should be sent only when the system is in “test mode.” This mode could be identical to the real mode in every way except that the choice of messages would be limited to test ones, with each message stating clearly something like “THIS IS ONLY A TEST.” If dangerous messages are not in the list of possible ones, no serious error can occur.

- The alert system should normally be in TEST mode. It should only go into LIVE mode when a real signal is intended. The change, and then the choice of message, should be confirmed by a second person.

- Require a second person to confirm the sending of a message–real or test–before it actually gets sent. The second person should be completely separated from the initiating person. It is like the two-key system used for numerous safety situations (for example in triggering an explosion for mining). Two locks are required, with two separate keys activated at the same time. The locks are far enough apart that one person cannot activate both. Similarly, for this test, the two people should have to be separately logged on, ideally at different physical locations.

The more the confirming person knows about the situation, the greater the chance of error, because someone who already expects the message is likely to approve it without looking closely. If the confirming person doesn’t know why the message is being sent, this will automatically lead to questions, which is precisely the response that will catch problems. Will it slow the response in case of real emergencies? Yes, but if the implementation is done well, the delay will be just a few seconds.
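To make the three suggestions above concrete, here is a minimal sketch in Python. It is not how the Hawaii system works or should be built in detail; every name in it (`AlertConsole`, `Mode`, `CATALOG`, `request_send`, `confirm`, the operator names) is invented for illustration. It assumes the console rests in TEST mode, keeps test and real messages in separate lists, and refuses to act until a second, different person confirms. Physical separation of the two people is not modeled.

```python
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    TEST = "TEST"
    LIVE = "LIVE"


# Hypothetical catalogs: test and real messages are kept in separate lists,
# so a real alert is simply not selectable while the console is in TEST mode.
CATALOG = {
    Mode.TEST: {"missile-drill": "THIS IS ONLY A TEST. Ballistic missile drill."},
    Mode.LIVE: {"missile": "BALLISTIC MISSILE THREAT INBOUND. SEEK IMMEDIATE SHELTER."},
}


@dataclass
class Pending:
    initiator: str
    kind: str      # "mode" or "send"
    value: object  # a Mode for "mode", the message text for "send"


class AlertConsole:
    def __init__(self):
        self.mode = Mode.TEST  # the normal resting state
        self.pending = None    # at most one request awaits confirmation
        self.sent = []         # messages that actually went out

    def choices(self):
        """Only the messages that belong to the current mode."""
        return CATALOG[self.mode]

    def request_mode(self, initiator, mode):
        self.pending = Pending(initiator, "mode", mode)

    def request_send(self, initiator, key):
        if key not in self.choices():
            raise KeyError(f"{key!r} is not available in {self.mode.value} mode")
        self.pending = Pending(initiator, "send", self.choices()[key])

    def confirm(self, confirmer):
        """Carry out the pending request, but only for a second person."""
        request = self.pending
        if request is None:
            raise RuntimeError("nothing is waiting for confirmation")
        if confirmer == request.initiator:
            raise PermissionError("confirmation must come from a second person")
        self.pending = None
        if request.kind == "mode":
            self.mode = request.value
        else:
            self.sent.append(request.value)
        return request.value
```

With this sketch, `request_send("operator-a", "missile")` fails outright in TEST mode, and `confirm("operator-a")` is rejected for any request that operator-a started. Nothing reaches `sent` until someone else confirms.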

Two more points. First, it is important to test any system before it is made operational. The test has to use the real operators, ideally under an induced state of emergency, with intense time pressure. Several different scenarios should be tested, including ones where there are many alarms going off, where people are urgently requesting information from one another, and where the people sending and confirming the message are continually being interrupted, pressured to rush, and badly needed elsewhere.

The second point is corrective action. When an erroneous message is sent out, the system has to allow for immediate correction (which also requires the two-step confirmation). The ease or difficulty of getting permission and sending a critically important but dangerous message should be matched by the ease or difficulty of getting permission to send a correction.

It is time to design our systems differently. We know how to prevent errors such as the false Hawaiian missile alert. Design assuming that people will err when taking actions under severe emotional stress and in a rush. Make sure that a single person’s actions cannot lead to harm. Test the resulting design under conditions as real and as difficult as is possible. Systems seldom work as the designer intended them to. That’s why we test early and then throughout the design stage.

Finally, use professional human-machine specialists from the fields that produce them: Human factors, ergonomics, human-computer engineering, and safety. We should not have to suffer more serious errors caused by a simple, single error.

*UPDATE 1/16/18 12 p.m.: The latest reports about the error indicate that the operator was asked to confirm the choice of message. Confirmations, however, are well-known to be a really bad safety check.

Here is what I wrote in my book Design of Everyday Things (Chapter 5: Human Error? No, Bad Design):

Many systems try to prevent errors by requiring confirmation before a command will be executed, especially when the action will destroy something of importance. But these requests are usually ill-timed because after requesting an operation, people are usually certain they want it done. Hence the standard joke about such warnings:

Person: Delete “my most important file.”

System: Do you want to delete “my most important file”?

Person: Yes.

System: Are you certain?

Person: Yes!

System: “My most favorite file” has been deleted.

Person: Oh. Damn.

The request for confirmation seems like an irritant rather than an essential safety check because the person tends to focus upon the action rather than the object that is being acted upon. A better check would be a prominent display of both the action to be taken and the object, perhaps with the choice of “cancel” or “do it.” The important point is making salient what the implications of the action are.
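As a rough illustration of that better check, the following Python sketch builds a confirmation prompt that shows the action and the object together. The function names, the field labels, and the rule that anything other than an explicit “do it” counts as “cancel” are all assumptions made for this example.

```python
def confirmation_prompt(action: str, title: str, text: str) -> str:
    """Show the action AND the object it acts on, then offer the two choices."""
    return "\n".join([
        f"ACTION:  {action}",
        f"MESSAGE: {title}",
        f"TEXT:    {text}",
        "[cancel]  [do it]",
    ])


def proceed(reply: str) -> bool:
    # Assumption for this sketch: only an explicit "do it" proceeds;
    # an empty or unexpected reply is treated as "cancel".
    return reply.strip().lower() == "do it"


prompt = confirmation_prompt(
    action="SEND TO ALL PHONES IN THE STATE",
    title="REAL: ballistic missile alert",
    text="BALLISTIC MISSILE THREAT INBOUND TO HAWAII. SEEK IMMEDIATE SHELTER.",
)
```

The operator sees the full text that will go out before choosing, so the consequences of “do it” are on the screen rather than in the operator's memory.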

The early reports indicate that the confirmation did NOT even display the message that was to be sent out! Of course the person wanted to send a message — however, the consequences must be clearly stated. That is why I separated the TEST messages from the REAL ones — different lists.

The screenshot in my article shows that not only are the messages mixed together, but the syntax is horrid: The first words ought to indicate whether it is a test or real — here, each person writing a message invented their own style of title. Bad design — really bad design.
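A naming rule like this is easy to check mechanically. The Python sketch below assumes a hypothetical convention in which every title must begin with the word TEST or REAL; the prefixes, the function names, and all sample titles except “PACOM (CDW) State Only” (taken from the screenshot caption) are invented for illustration.

```python
ALLOWED_PREFIXES = ("TEST", "REAL")  # hypothetical convention for this sketch


def title_is_well_formed(title: str) -> bool:
    """True when the first word of the title says whether it is a test or real."""
    words = title.split(maxsplit=1)
    if not words:
        return False  # empty or whitespace-only title
    return words[0].rstrip(":") in ALLOWED_PREFIXES


def malformed_titles(titles):
    """Return the titles that break the convention, in their original order."""
    return [t for t in titles if not title_is_well_formed(t)]


sample = ["TEST: missile drill", "PACOM (CDW) State Only", "REAL: tsunami warning"]
flagged = malformed_titles(sample)
```

Here `flagged` contains only “PACOM (CDW) State Only”, the one title whose first word does not announce whether the message is a test or real.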

Even the attempt to put in a check was badly done.

Sigh. Please do not let programmers design human interaction: Get professionals who are trained in interaction design and human factors.

–

This article first appeared in www.fastcodesign.com
